Serverless Inference

Hyperbolic’s Serverless Inference is an affordable AI inference platform designed for developers who need fast, serverless deployment of open-source models. It eliminates the complexity of managing GPU infrastructure, offering one-click deployment, low-latency inference, and full privacy control with zero data retention.

Overview

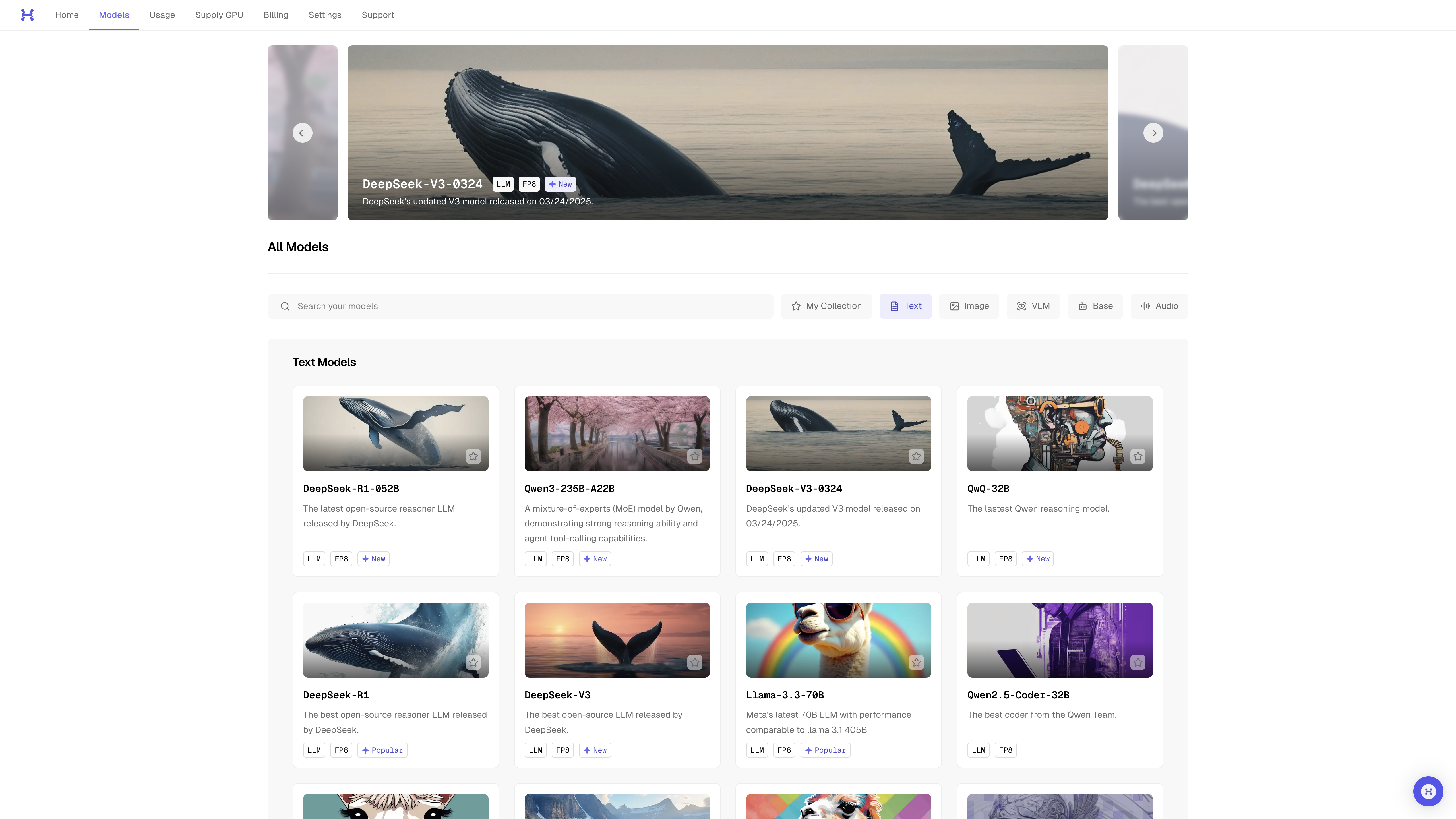

Hyperbolic supports 25+ open-source text, image, vision-language, and audio models, delivering inference at a fraction of the cost of major cloud providers while remaining fully API-compatible with OpenAI and other ecosystems.

Features

Zero Data Retention

All requests are stateless: your data is never stored or reused.

Base vs. Instruct Models

- Base models are versatile completion engines, ideal for open-ended tasks.

- Instruct models are fine-tuned for direct commands and structured outputs.
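The difference between the two model families shows up in the shape of the request body. The sketch below assumes Hyperbolic follows the OpenAI conventions, where a base model receives raw text in a `prompt` field and an instruct model receives a list of role-tagged `messages`. This page does not confirm those field names, and the helper names `completion_payload` and `chat_payload` are hypothetical.

```python
def completion_payload(model, prompt, max_tokens=128):
    """Body for a base model: the model simply continues the given text.

    Assumption: an OpenAI-style completions body with a `prompt` field.
    """
    return {"model": model, "prompt": prompt, "max_tokens": max_tokens}


def chat_payload(model, instruction, system=None, max_tokens=128):
    """Body for an instruct model: the command is sent as a user message.

    Assumption: an OpenAI-style chat body with a `messages` list.
    """
    messages = []
    if system:
        # An optional system message sets behaviour for the whole conversation.
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": instruction})
    return {"model": model, "messages": messages, "max_tokens": max_tokens}
```

With a base model the prompt is usually written as the start of the desired output (for example `"Once upon a time"`), whereas an instruct model is given the task itself (for example `"Write a short fairy tale."`).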

Developer Tools & API Support

- Multi-Language SDKs: Generate API requests using Python, TypeScript, and cURL.

- API Playground: Test models before paying, with live adjustments for temperature, max tokens, and top-p.

- REST API: Access models via a Chat Completions-compatible REST API, with streaming support for token-by-token responses in chat applications.

- Python & TypeScript: Fully OpenAI-compatible; just swap your `api_key` and `base_url` to switch to Hyperbolic's models.

- Gradio & HF Spaces: Deploy and interact with models using Gradio, with one-click deployment to Hugging Face Spaces for easy prototyping and shareable web interfaces.
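As a rough illustration of the REST and streaming points above, the following sketch builds a Chat Completions-style request with only the Python standard library and reads a streamed reply chunk by chunk. Several details are assumptions rather than facts from this page: the base URL `https://api.hyperbolic.xyz/v1`, the `/chat/completions` path, the bearer-token header, and the OpenAI-style server-sent-event chunk format (`data: {...}` lines ending with `data: [DONE]`). The function names are hypothetical.

```python
import json
import urllib.request

# Assumption: the API root; check the dashboard for the actual value.
HYPERBOLIC_BASE_URL = "https://api.hyperbolic.xyz/v1"


def build_chat_request(api_key, model, messages, base_url=HYPERBOLIC_BASE_URL,
                       temperature=0.7, max_tokens=256, top_p=0.9, stream=False):
    """Build (but do not send) a Chat Completions-style POST request."""
    payload = {
        "model": model,
        "messages": messages,
        "temperature": temperature,
        "max_tokens": max_tokens,
        "top_p": top_p,
        "stream": stream,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def parse_stream_line(line):
    """Return the text delta carried by one event line, or None if there is none."""
    line = line.strip()
    if not line.startswith("data:"):
        return None  # blank keep-alive lines, comments, other fields
    data = line[len("data:"):].strip()
    if data == "[DONE]":
        return None  # assumed end-of-stream sentinel
    choices = json.loads(data).get("choices") or []
    if not choices:
        return None
    return choices[0].get("delta", {}).get("content")


def stream_reply(request):
    """Send the request and yield text pieces as they arrive (performs network I/O)."""
    with urllib.request.urlopen(request) as response:
        for raw in response:
            piece = parse_stream_line(raw.decode("utf-8"))
            if piece:
                yield piece
```

With the official `openai` Python package the equivalent change is smaller: pass `api_key` and `base_url` to the `OpenAI(...)` client constructor and leave the rest of the calling code unchanged.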

Tiered Pricing

| Tier | RPM Limit | IP Limit | Min. Deposit | Support | Dedicated Instances | Fine-Tuning |
|---|---|---|---|---|---|---|
| Basic | 60 | 100 | $0 | No | No | No |
| Pro | 600 | 100 | ≥ $5 | No | No | No |
| Enterprise | Unlimited | Unlimited | Contact Sales | Yes | Yes | Yes |

Note: Each source IP is capped at 600 RPM to prevent DDoS.
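One way to stay under a tier's RPM limit is to throttle on the client side. The sketch below is a simple sliding-window limiter, not something Hyperbolic provides: the class name `RpmLimiter` is hypothetical, and the 60-second window is an assumption about how "requests per minute" is counted on the server.

```python
import time
from collections import deque


class RpmLimiter:
    """Allow at most `rpm` calls in any rolling 60-second window."""

    WINDOW_SECONDS = 60.0  # assumption: the server counts over a rolling minute

    def __init__(self, rpm, clock=time.monotonic, sleep=time.sleep):
        if rpm <= 0:
            raise ValueError("rpm must be positive")
        self.rpm = rpm
        self._clock = clock      # injectable so the limiter can be tested
        self._sleep = sleep
        self._sent = deque()     # timestamps of calls still inside the window

    def wait_time(self):
        """Seconds to wait before the next call is allowed (0.0 if none)."""
        now = self._clock()
        # Forget calls that have fallen out of the window.
        while self._sent and now - self._sent[0] >= self.WINDOW_SECONDS:
            self._sent.popleft()
        if len(self._sent) < self.rpm:
            return 0.0
        return self.WINDOW_SECONDS - (now - self._sent[0])

    def acquire(self):
        """Block until a call is allowed, then record it."""
        delay = self.wait_time()
        while delay > 0:
            self._sleep(delay)
            delay = self.wait_time()
        self._sent.append(self._clock())
```

A Basic-tier client would create `limiter = RpmLimiter(60)` once and call `limiter.acquire()` before each request; a Pro-tier client would use `RpmLimiter(600)`.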

For full details, visit app.hyperbolic.xyz/models or email [email protected].
